Raise your hand if, while out walking with a mask on, you have never returned a greeting without being sure who was actually greeting you, or never taken advantage of your half-covered face to hide a look of disappointment. That is today's topic: the mask factor, which hides many pitfalls in everyday relationships and also puts to the test the tools and software commonly used to recognize facial expressions.

With this in mind, we decided to run an experiment to study how the instruments we use to decode basic emotions through facial microexpressions (if you missed it, read our article “Face Reading: understanding emotions to satisfy your customers”) process information when a mask is present, and how reliable they can be.

But before we get to the heart of our face reading research, it may be useful to take a small step back.

Why is it important to understand emotions to design effective user experiences?

Designing user experiences that incorporate people’s point of view is the only way to create satisfying solutions that achieve the goals they were designed for. However, some mental, emotional and irrational factors are inherently difficult to put into words, and yet they are fundamental when people choose a product or service.

Is it possible, then, to find out objectively how people feel, without relying on what they tell us? The answer is yes.

There are tools and skills that, combined, allow us to investigate consumers’ unconscious perceptions and emotions, and to understand their feelings and emotional reactions when they use a product or service, or look at a website, a mobile app, a video or a package.

Detecting facial expressions under a mask: our research

With these premises in place, we can now get to the heart of the experiment we conducted to test the instruments we use for face reading and the detection of facial microexpressions.

The research involved 140 people, 70 with masks and 70 without. Each participant watched a 30-minute video while software recorded their facial expressions second by second (we leave you to imagine the amount of data we had to process afterwards…).
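For a rough idea of the scale, the arithmetic below assumes exactly one record per participant per second, no dropped frames, and one score for each of the software’s 8 emotion categories. These are simplifying assumptions for illustration, not figures taken from our dataset.

```python
# Back-of-the-envelope estimate of the raw data volume.
# Assumptions (not from the dataset): one record per participant per second,
# no dropped frames, one score per emotion category in every record.
PARTICIPANTS = 140          # 70 with masks + 70 without
VIDEO_SECONDS = 30 * 60     # a 30-minute video
CATEGORIES = 8              # neutral, happiness, sadness, anger, surprise, fear, disgust, contempt

records = PARTICIPANTS * VIDEO_SECONDS   # one record per participant per second
scores = records * CATEGORIES            # one value per category per record

print(f"{records:,} per-second records, {scores:,} emotion scores")
# prints: 252,000 per-second records, 2,016,000 emotion scores
```

Real recordings will usually contain fewer usable records, since the software does not succeed on every frame.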

The software classified the expressions into 8 categories: “neutral”, “happiness”, “sadness”, “anger”, “surprise”, “fear”, “disgust”, “contempt”.

We expected subjects without a mask to show a lower value for the “neutral” category than subjects with one; in other words, we expected emotions to be more evident in the absence of personal protective equipment, and therefore to register higher values.

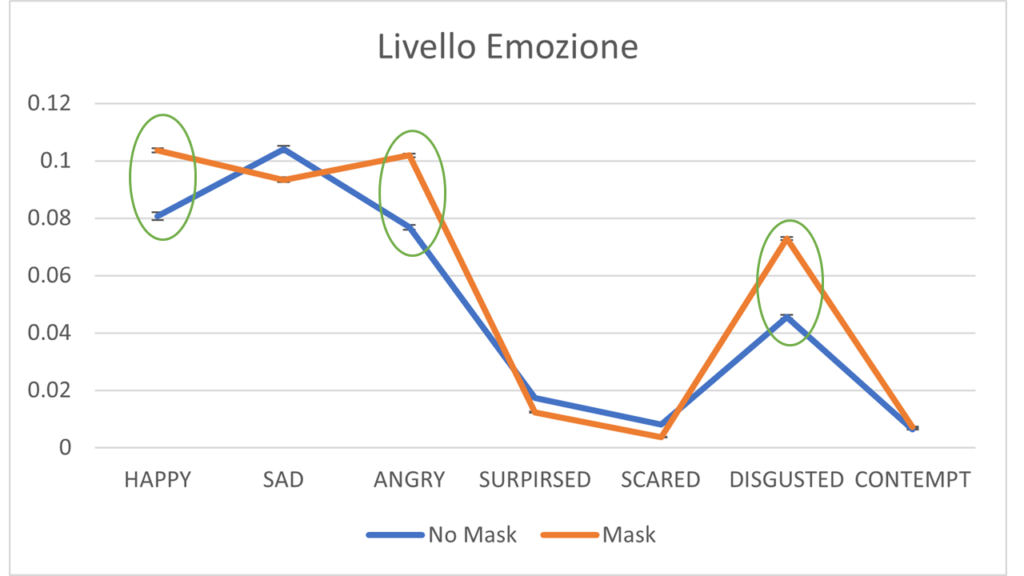

Instead, after preparing the dataset and comparing, for each emotion, the averages of the masked group with those of the unmasked group, we found that it was the people wearing masks who showed lower activation for the state coded as “neutral”. In more detail, people with masks showed significantly greater activation for “happiness” (and correspondingly lower activation for “sadness”), “anger” and “disgust” (Fig. 1).
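To make the comparison concrete, here is a minimal Python sketch of per-emotion group averages under assumptions of our own: each subject is a list of per-second samples, each sample is a dict mapping an emotion to a score (or None when the software failed), and each subject is averaged first so that participants with more valid frames do not weigh more. The function names (`subject_mean`, `group_means`, `mask_effect`) are hypothetical. The sketch computes only the raw differences; it does not reproduce the significance testing behind the result above.

```python
from statistics import mean

# The 8 categories reported by the face-reading software.
EMOTIONS = ["neutral", "happiness", "sadness", "anger",
            "surprise", "fear", "disgust", "contempt"]

def subject_mean(samples, emotion):
    """Mean score of one emotion for one subject, skipping failed samples (None)."""
    values = [s[emotion] for s in samples if s.get(emotion) is not None]
    return mean(values) if values else None

def group_means(subjects):
    """Per-emotion group average: average each subject first, then the subjects."""
    result = {}
    for emotion in EMOTIONS:
        per_subject = [subject_mean(samples, emotion) for samples in subjects]
        per_subject = [v for v in per_subject if v is not None]
        # NaN marks an emotion that was never recorded in this group.
        result[emotion] = mean(per_subject) if per_subject else float("nan")
    return result

def mask_effect(masked, unmasked):
    """Masked minus unmasked group mean per emotion (positive = higher with mask)."""
    m, u = group_means(masked), group_means(unmasked)
    return {e: m[e] - u[e] for e in EMOTIONS}
```

Calling `mask_effect(masked_subjects, unmasked_subjects)` returns one signed difference per category; a negative value for “neutral” together with positive values for “happiness”, “anger” and “disgust” would match the pattern described above.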

After the averages, we looked at the “good data”, that is, how many times the software successfully recorded an emotion and how many times it failed. There were no major differences in quantity: slightly fewer data were recorded for masked subjects, except for “happiness”, where we had more. In addition, the number of subjects for whom an emotion was recorded with a frequency greater than half of the collected data plus one was similar in both groups. The amount of “good data” was therefore comparable between the two groups.
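The “good data” check can be sketched with the same hypothetical layout (a subject is a list of per-second samples, each mapping an emotion to a score, or None when detection failed). The threshold is our interpretation: we read “greater than half of the collected data plus one” as a simple majority of a subject’s samples. The function names are illustrative only.

```python
def good_data_count(samples, emotion):
    """Number of samples in which the software recorded a value for `emotion`."""
    return sum(1 for s in samples if s.get(emotion) is not None)

def meets_majority(samples, emotion):
    """True if `emotion` was recorded in at least half the samples plus one.

    Assumption: the threshold is read as a strict majority,
    i.e. count >= len(samples) // 2 + 1 (equivalently, count > len(samples) / 2).
    """
    return good_data_count(samples, emotion) >= len(samples) // 2 + 1

def subjects_meeting_majority(subjects, emotion):
    """How many subjects in a group pass the majority threshold for `emotion`."""
    return sum(1 for samples in subjects if meets_majority(samples, emotion))
```

Running `subjects_meeting_majority` on the masked and unmasked groups for each emotion gives the two counts that the comparison above refers to.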

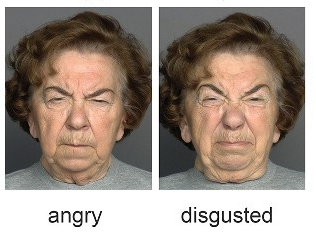

There is still little information on this topic in the literature. Some software vendors have begun to expand their databases by digitally adding masks to the photos they already held, although so far this is not enough for precise emotion detection (Bo, Yang & Wu, Jianming & Hattori, Gen; 2020). In general, software error rates have been found to increase when processing the faces of people wearing masks, which is consistent with the slight gap in good data we observed. It has also been found that, when people have to decode the facial expressions of someone wearing a mask, they can easily recognize emotions such as anger and disgust, although they often confuse the two with each other; happiness, on the other hand, is harder to recognize (Carbon C-C, 2020).

Try it yourself: can you tell which of these two expressions is anger and which is disgust?

We will give you the solution at the end of this article.

So, is current software able to detect and understand the emotions of people wearing a mask?

Based on our data, the eye area does seem sufficient to detect anger and disgust, and happiness as well; in fact, these emotions are even overestimated (Fig. 1). One possible explanation is that when the software processes both the upper and the lower half of the face and the two do not agree, the detected intensity of the emotion is lower or disappears altogether, whereas this conflict cannot arise when only the upper half is processed.

In conclusion, the ideal would be to use software whose database contains plenty of information about masked faces. Until then, we can still collect and analyze emotion data from masked subjects; what matters is to keep in mind that the data may overestimate some emotions, since the processing is based on only part of the face.

Bo, Yang & Wu, Jianming & Hattori, Gen (2020) Facial Expression Recognition with the advent of face masks. doi: 10.1145/3428361.3432075

Carbon C-C (2020) Wearing Face Masks Strongly Confuses Counterparts in Reading Emotions. Front. Psychol. 11:566886. doi: 10.3389/fpsyg.2020.566886

Ah, yes! The solution!

Here it is:

If you didn’t guess it, remember that the two are very easy to confuse when the eyes are the only clue. So we, like software, can make mistakes too. And if you meet someone on the street who seems angry with you, maybe they have just eaten a not-so-tasty candy.